STRAIGHT TALK ABOUT ARTIFICIAL INTELLIGENCE WITH KATHRYN HUME AND CAROLE PIOVESAN

By TheWAY - March 26, 2018

Kathryn Hume and Carole Piovesan are powerful forces in the Toronto artificial intelligence community. Kathryn Hume is Vice President of product and strategy for Integrate.ai and a venture partner at ffVC, a seed and early-stage technology venture capital firm. Carole Piovesan is a lawyer at McCarthy Tétrault LLP and the firm’s lead in the area of artificial intelligence. Piovesan has appeared before various administrative tribunals, at all levels of court in Ontario, as well as at the Supreme Court of Canada. Hume and Piovesan are widely respected speakers and writers on AI and excel at communicating how AI and machine learning technologies work in everyday language.

As the world seeks to understand the ramifications of artificial intelligence, many want to know how an AI revolution will reshape our world. Piovesan and Hume will be sharing their thoughts at Osgoode Professional Development’s Artificial Intelligence: Confronting the Legal and Ethical Issues of AI in Business (April 25) program, where they will be joined by Brian Kuhn (Global Leader, IBM Watson Legal) and Julie Chapman (General Counsel, Canada and Chief Privacy Officer, LexisNexis Canada).

Kathryn Hume and Carole Piovesan recently spoke with OsgoodePD Program Lawyer, Amy ter Haar, about what AI means to them for an upcoming episode of Strictly Legal, OsgoodePD’s podcast on all things legal. Here’s an excerpt of that conversation and a sneak peek at what you can expect at the upcoming AI program.

What does AI mean to you?

Carole Piovesan: Artificial intelligence is a sophisticated computer system that crunches a whole bunch of data and uses that data to make meaningful predictions.

What is interesting from a legal perspective, however, is that an AI system can analyze data, make predictions and, in some cases, and increasingly, act upon the predictions. In such a case, there is an interesting relationship between the system and the human. From a legal standpoint, what does it mean when the human is no longer at the centre of the action?

AI provides a host of opportunities in terms of making processes more efficient and deriving greater meaning from data that already exists. But increasingly using that information to act in an independent way poses some really interesting legal questions.

Kathryn Hume: AI is a murky enough term that we exercise agency in how we choose to define it, colouring the issues that come to the fore and the questions that we ask about the technology. While it’s jocular, I like Nancy Fulda’s definition that “AI is whatever computers can’t do until they can.” This definition shows how AI is more a psychological term than a technical term, more about what we consider to be intelligence than any rigorous technical capability, since many different underlying technologies power the products that marketers refer to as artificial intelligence.

It also bakes a notion of progress into the definition, showing that it’s a moving target. These technologies are advancing so quickly that it is hard to have a rigorous technical definition to say that ‘x’ technique qualifies as artificial intelligence and ‘y’ doesn’t anymore.

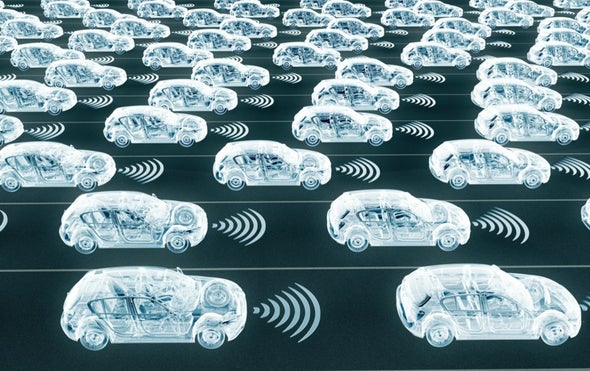

A great example is Google Maps, which most of us no longer think of as artificial intelligence because we’ve become accustomed to seeing it as plain old technology, even though it’s powered by some of the most sophisticated data and machine learning algorithms out there. Yet we currently consider self-driving cars to be a form of AI because they’re just on the horizon of conceptual possibility.

Historically, multiple underlying technologies have qualified under the general rubric of AI, from deterministic methods based upon predicate logic to expert systems that encode common rules in a given domain. We currently associate AI with machine learning, which, as Carole mentioned, is a subfield of computer science where computers improve their performance upon a specified task as they receive new data.

Hear more from Kathryn Hume and Carole Piovesan at OsgoodePD’s Artificial Intelligence: Confronting the Legal and Ethical Issues of AI in Business, April 25. Grab your ticket and take 10% off with code BETAKIT10.

SOURCE: https://betakit.com/straight-talk-about-artificial-intelligence-with-kathryn-hume-and-carole-piovesan/